How Hardware Acceleration Boosts Machine Learning

Machine learning (ML) powers everything from your smartphone's voice assistant to the recommendations that predict which movie you'll want to watch next on your favorite streaming service. For all its apparent magic, though, machine learning needs a lot of computational power to work effectively. This is where hardware acceleration comes in, acting as a turbo boost for machine learning workloads. Let's dive into how hardware acceleration makes machine learning faster, more capable, and more accessible than before, all explained in plain English.

The Need for Speed

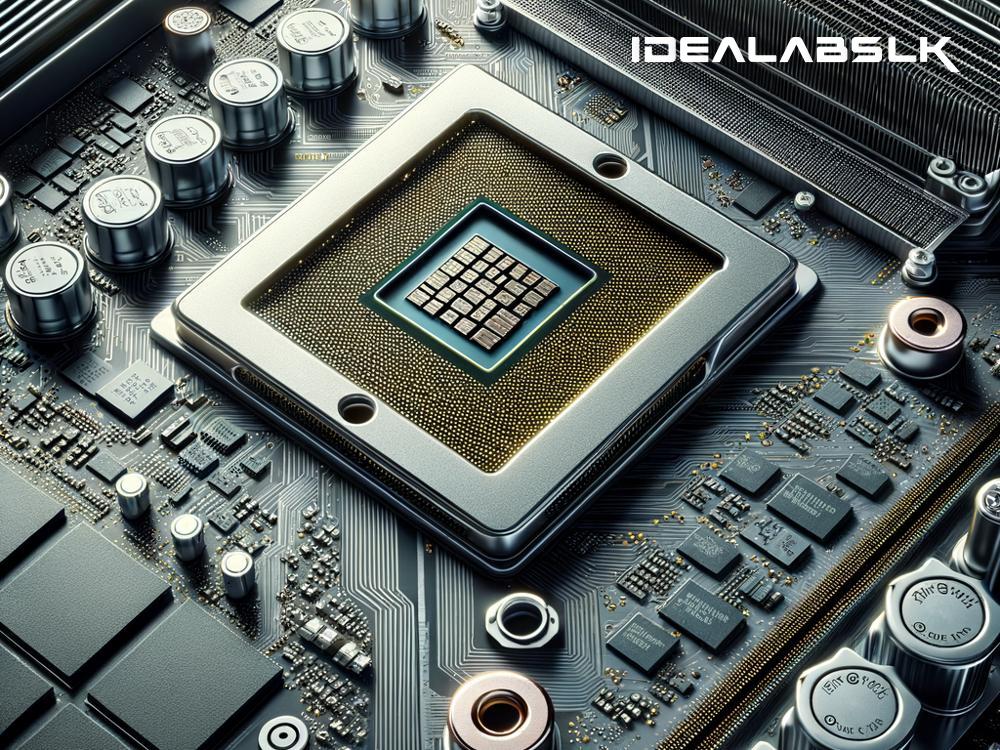

At its core, machine learning involves analyzing massive amounts of data to identify patterns, make decisions, or predict outcomes. This process is both data-heavy and computation-heavy. Initially, traditional CPUs (Central Processing Units), the brains of our computers, handled the task. However, as machine learning models became more complex and datasets grew rapidly, CPUs alone couldn't keep up with the demand for speed and efficiency. Enter hardware acceleration.

What is Hardware Acceleration?

Hardware acceleration refers to the use of specialized hardware to perform certain functions more efficiently than is possible in software running on a general-purpose CPU. In the context of machine learning, hardware acceleration lets specialized hardware take over the most compute-intensive tasks from the CPU, significantly speeding up ML workloads. The most common types of hardware accelerators for machine learning are GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units).
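Because an accelerator only takes over part of the work, the overall gain depends on how much of the job can be offloaded. One common way to reason about this is Amdahl's law, which is brought in here purely as an illustration. The function name and the example numbers (90% of the work offloaded, a 10x faster accelerator) are assumptions, not measurements.

```python
def overall_speedup(offloaded_fraction, accelerator_speedup):
    """Amdahl's law: total speedup when only part of a job is accelerated.

    offloaded_fraction: share of the original runtime moved to the accelerator (0..1)
    accelerator_speedup: how many times faster the accelerator runs that share
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if accelerator_speedup <= 0:
        raise ValueError("accelerator_speedup must be positive")
    remaining_on_cpu = 1.0 - offloaded_fraction
    accelerated_part = offloaded_fraction / accelerator_speedup
    return 1.0 / (remaining_on_cpu + accelerated_part)


# Hypothetical numbers: 90% of the runtime is offloaded to hardware that is 10x faster.
example = overall_speedup(0.9, 10.0)
print(f"Overall speedup: {example:.2f}x")
```

With these made-up numbers the whole job runs a little over 5x faster, not 10x, because the 10% that stays on the CPU becomes the bottleneck.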

GPU: The Game Changer

Although initially designed to handle computer graphics and visual computing, GPUs have become a cornerstone of machine learning acceleration. A CPU is designed to handle a wide variety of tasks but can only execute a few instruction streams simultaneously. A GPU, by contrast, is built to process many tasks at once, thanks to its thousands of smaller, simpler cores. This makes GPUs exceptionally good at the parallel processing that machine learning algorithms require, and the result is a substantial performance boost. For tasks like training deep learning models or analyzing large datasets, GPUs can in some cases cut processing times from weeks to hours.
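To see why this kind of work suits many cores, consider multiplying a matrix by a vector: each output value depends on only one row, so the rows can be handled independently. The sketch below is plain Python, not GPU code, and the function names are made up for illustration. It uses a thread pool only to show that the rows can be computed separately with the same result. In standard CPython, threads will not actually make this arithmetic faster.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Multiply matching elements and add them up."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_sequential(matrix, vec):
    """One row after another, as a single core would do it."""
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    """Each row is an independent task that can be handed to a separate worker."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map keeps results in the same order as the input rows.
        return list(pool.map(lambda row: dot(row, vec), matrix))


matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
vec = [1, 0, -1]

print(matvec_sequential(matrix, vec))
print(matvec_parallel(matrix, vec))
```

Both calls print the same list. A GPU applies the same idea at a much larger scale, assigning independent pieces of the calculation to thousands of cores at once.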

TPU: The Machine Learning Workhorse

Next came the TPU, a hardware accelerator that Google designed specifically for neural network workloads. TPUs were originally built around TensorFlow, Google's open-source machine learning framework, and can now also be used from other frameworks such as JAX and PyTorch. They are optimized to perform the high-volume, simple calculations typical of machine learning extremely efficiently. For certain machine learning tasks, they can offer faster processing and greater energy efficiency than GPUs. TPUs show just how specialized and efficient hardware accelerators can become.
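The "high-volume, simple calculations" mentioned above are mostly multiply-and-add steps inside matrix multiplication. The sketch below is a plain Python illustration of that arithmetic, not TPU code, and the function name is made up for this example. It multiplies two small matrices and counts how many multiply-accumulate steps were needed.

```python
def matmul_with_mac_count(a, b):
    """Multiply matrices a (m x k) and b (k x n), counting multiply-accumulate steps."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions of a and b must match")
    out = [[0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            for k in range(inner):
                # One multiply-accumulate: multiply two numbers, add to a running total.
                out[i][j] += a[i][k] * b[k][j]
                macs += 1
    return out, macs


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product, mac_count = matmul_with_mac_count(a, b)
print(product)    # [[19, 22], [43, 50]]
print(mac_count)  # 8
```

Even this tiny 2 x 2 example takes 8 multiply-accumulate steps, and the count grows as m x k x n. Neural networks multiply far larger matrices over and over, which is why hardware dedicated to this single operation pays off.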

The Impact of Hardware Acceleration on Machine Learning

The effect of hardware acceleration on machine learning is profound. First and foremost, it means much faster processing times. This allows for more experiments and iterations, and ultimately for more rapid progress in developing and refining machine learning models. It also means more complex models can be developed, because the hardware can handle the increased computational load. This, in turn, can lead to more accurate predictions and more sophisticated AI applications.

Moreover, hardware acceleration makes machine learning more accessible. Work that once required a supercomputer can now often be done on a desktop or even a high-end laptop equipped with the right GPU. This democratization of machine learning tools and capabilities opens up the field to a broader range of researchers, developers, and companies, driving innovation and application development.

Looking Ahead

As we look to the future, it's clear that hardware acceleration will continue to play a crucial role in advancing machine learning. With the rise of edge computing, where processing takes place on devices at the edge of the network rather than in a centralized data center, the need for efficient, powerful hardware accelerators will only grow. The development of new, even more efficient accelerators, whether next-generation GPUs, TPUs, or entirely new forms of hardware, will be key to unlocking the full potential of machine learning.

Conclusion

Hardware acceleration is a game-changer for machine learning, providing the computational muscle needed to make AI faster, more capable, and more efficient. As technology continues to evolve, the synergy between machine learning algorithms and specialized hardware is likely to open new frontiers in artificial intelligence, making what once seemed like science fiction a reality. So the next time you ask your voice assistant a question, remember that there's some serious hardware power behind that seemingly simple answer.