Demystifying the Role of Data Pipelines in Machine Learning Workflows

In today's digital age, machine learning (ML) has become a cornerstone of technology, pushing the boundaries of what machines can do. From recommending your next favorite movie to driving autonomous cars, machine learning is everywhere. But have you ever wondered what powers these smart systems behind the scenes? Enter data pipelines, the unsung heroes of machine learning. Let's break down what data pipelines do in machine learning workflows, in plain terms and with as little jargon as possible.

What is a Data Pipeline?

Imagine you're trying to make a delicious pie. You need various ingredients from your fridge (data sources), and you must mix them in the right order to create your filling (the data processing), before finally baking it in the oven to get the final dish (the end result or insight). A data pipeline does something similar with data. It's a series of steps or processes where data is collected from different sources, then cleaned, transformed, and fed into a machine learning model, just like preparing, mixing, and baking the ingredients to get your pie.
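To make the "series of steps" idea concrete, here is a minimal sketch in Python. It treats a pipeline as nothing more than an ordered list of functions, where each step's output becomes the next step's input. The step names (`drop_missing`, `to_float`, `scale_to_unit`) and the sample values are hypothetical, chosen only for illustration.

```python
from functools import reduce


def run_pipeline(data, steps):
    """Pass data through each step in order; each output feeds the next step."""
    return reduce(lambda current, step: step(current), steps, data)


# Hypothetical steps, named only for this illustration.
def drop_missing(values):
    """Remove entries that are missing entirely."""
    return [v for v in values if v is not None]


def to_float(values):
    """Convert text values to numbers."""
    return [float(v) for v in values]


def scale_to_unit(values):
    """Divide by the largest value so everything falls between 0 and 1."""
    largest = max(values)
    return [v / largest for v in values]


raw = ["2", None, "4", "8"]  # made-up raw input
prepared = run_pipeline(raw, [drop_missing, to_float, scale_to_unit])
print(prepared)  # [0.25, 0.5, 1.0]
```

The order matters here in the same way it does in the kitchen: `scale_to_unit` would fail on text or missing values, so the earlier steps have to run first.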

Why Are Data Pipelines Important in Machine Learning?

Without data pipelines, machine learning would be like trying to bake that pie without preparing or mixing the ingredients: the result would rarely be worth eating. Data in the real world is messy and inconsistent. It comes in various formats and often contains errors or irrelevant information. If we fed this raw data directly into machine learning models, the results would be as disappointing as a pie made from unprepared ingredients. This is where data pipelines come to the rescue, ensuring the data is in the right form for the models to digest and learn from.

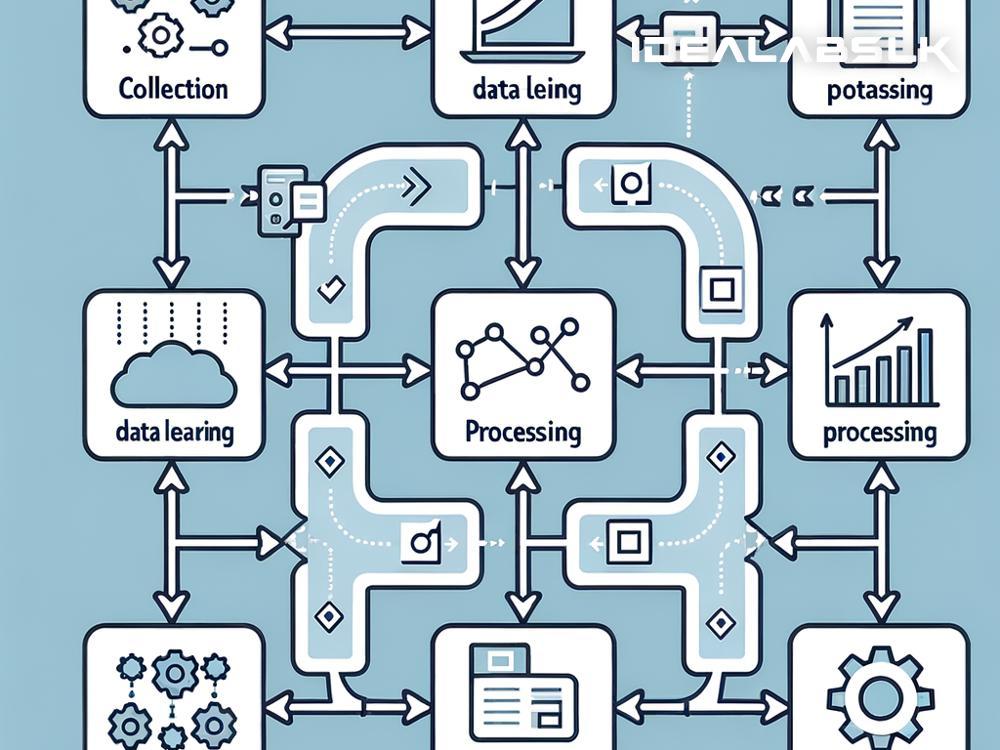

The Stages of a Data Pipeline in Machine Learning

To understand data pipelines better, let's walk through their key stages:

- Data Collection: This is the first step, where data is gathered from various sources. Like our pie ingredients, data can come from many places: databases, online sources, sensors, etc.

- Data Cleaning and Preparation: Not all data collected is useful. This stage involves removing irrelevant parts, correcting errors, and converting the data into a format that’s easier for the model to understand; think of it as cleaning and chopping your ingredients.

- Data Transformation: Here, the prepared data is transformed. This could involve normalizing the data (ensuring that all the data is on a similar scale) or converting data types. It's akin to mixing your ingredients in the right proportion.

- Data Modeling: This is where the magic happens. The transformed data is fed into the machine learning model, which learns from the data and makes predictions or decisions. It's the equivalent of putting your pie in the oven to bake.

- Monitoring and Maintenance: Just like you might check the pie to ensure it's baking perfectly, data pipelines need to be monitored and maintained. This ensures they work efficiently and adapt to any new data or changes in the data source.
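The five stages above can be sketched end to end in a few lines of plain Python. This is a toy illustration under stated assumptions, not a production design: the records, the field names `size` and `price`, the straight-line model, and the `max_drop_rate` threshold are all invented for the example. Real pipelines would typically lean on dedicated libraries and tools.

```python
def collect():
    """Stage 1: gather records from several (hypothetical) sources."""
    database_rows = [{"size": "50", "price": "150"}, {"size": "80", "price": "240"}]
    sensor_rows = [{"size": "n/a", "price": "300"}, {"size": "100", "price": "300"}]
    return database_rows + sensor_rows


def clean(rows):
    """Stage 2: keep only records whose fields parse as numbers."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append((float(row["size"]), float(row["price"])))
        except ValueError:
            continue  # skip malformed records such as size "n/a"
    return cleaned


def transform(pairs):
    """Stage 3: min-max normalize the input feature to the range [0, 1]."""
    sizes = [size for size, _ in pairs]
    lo, hi = min(sizes), max(sizes)
    span = (hi - lo) or 1.0  # avoid dividing by zero if all sizes are equal
    return [((size - lo) / span, price) for size, price in pairs]


def fit(pairs):
    """Stage 4: fit a straight line by ordinary least squares."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in pairs) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


def monitor(raw_count, clean_count, max_drop_rate=0.5):
    """Stage 5: report how much data was discarded and warn if it is too much."""
    drop_rate = (raw_count - clean_count) / raw_count
    if drop_rate > max_drop_rate:
        print(f"warning: {drop_rate:.0%} of records were dropped")
    return drop_rate


rows = collect()
pairs = clean(rows)
scaled = transform(pairs)
slope, intercept = fit(scaled)
drop_rate = monitor(len(rows), len(pairs))
print(round(slope, 2), round(intercept, 2), drop_rate)
```

In this made-up data, price is exactly three times size, so the fitted line comes out at roughly slope 150 and intercept 150 on the normalized scale, and the monitoring step reports that one record in four was dropped during cleaning.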

The Benefits of Efficient Data Pipelines

- Improved Accuracy: Well-prepared data leads to more accurate models since the quality of data input directly affects the output.

- Time Efficiency: Automated data pipelines save valuable time in the data prep stage, allowing data scientists and ML engineers to focus more on model design and less on data wrangling.

- Scalability: Well-designed data pipelines can handle increasing amounts of data efficiently, for example by processing records in batches rather than all at once, making it easier to scale ML projects.

- Flexibility: They can easily be adjusted or expanded to include new data sources or change the data processing steps.
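One common way to get the scalability benefit is to process data as a stream instead of loading everything into memory. The sketch below uses Python generators for this; the function names and the sample lines are hypothetical, and the in-memory list stands in for what would normally be a file or a network source.

```python
from itertools import islice


def read_records(source):
    """Yield one record at a time instead of loading the whole source."""
    for line in source:
        yield line.strip()


def clean_records(records):
    """Skip blank records and convert the rest to numbers."""
    for record in records:
        if record:
            yield float(record)


def batches(items, size):
    """Group a stream into lists of at most `size` items."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


# Stand-in for a large file; only one batch is held in memory at a time.
source = ["1.0\n", "\n", "2.0\n", "3.0\n", "4.0\n", "5.0\n"]
stream = batches(clean_records(read_records(source)), 2)
batch_means = [sum(batch) / len(batch) for batch in stream]
print(batch_means)  # [1.5, 3.5, 5.0]
```

Because each stage only pulls the next record when it is needed, the same code works whether the source has six lines or six million, and a new cleaning step can be slotted between the existing generators without touching them.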

The Final Slice

In summary, data pipelines are vital in machine learning, acting as the backbone that supports the entire process. They ensure that the data feeding into machine learning models is clean, well-prepared, and in the right format, much like preparing ingredients meticulously for a perfect pie. With the growth of machine learning applications across industries, the role of data pipelines has become increasingly important, proving that good data practices are not just preferable; they are essential for success.

Understanding and implementing efficient data pipelines can seem intimidating at first, but with the right tools and practices, it's entirely achievable. Plus, the rewards (accurate models, efficient workflows, and the ability to scale) are well worth the effort. As we continue to explore and expand the capabilities of machine learning, the significance of robust data pipelines will only grow, because every new application depends on well-prepared, high-quality data. Let's cherish and invest in the unsung heroes of machine learning, enabling smarter systems that make our lives easier and more connected.